Reality Is Not Optional: What AI Hype Forgets

Reader note: This is not an article about tools, productivity hacks, or AI adoption playbooks. It is about responsibility, systems, and what happens when organisations confuse narrative with reality.

For decades, serious industries have shared a quiet rule: reality always gets a veto.

Gravity does not negotiate with aviation.

Biology does not care about medical roadmaps.

Steel, load, and weather do not align themselves with architectural ambition.

Information Technology, by contrast, has spent the last twenty years drifting away from this constraint. Feedback loops became abstract, consequences delayed, and failure increasingly socialised or externalised. AI did not create this gap. It merely accelerated it.

The current obsession with autonomous AI agents, “vibe coding”, and team-replacement narratives is not a technological breakthrough. It is a symptom of a deeper disconnection from reality.

Serious systems never optimise intelligence in isolation

When aviation adopted automation, it did not ask whether machines could replace pilots. It asked how machines could reduce error while preserving human responsibility. Automation became a co-pilot, not a hero. Redundancy, certification, human override, and clear accountability were non-negotiable.

Medicine followed the same logic. Diagnostic tools assist clinicians. They do not absorb liability. When something fails, a human name is still attached to the decision.

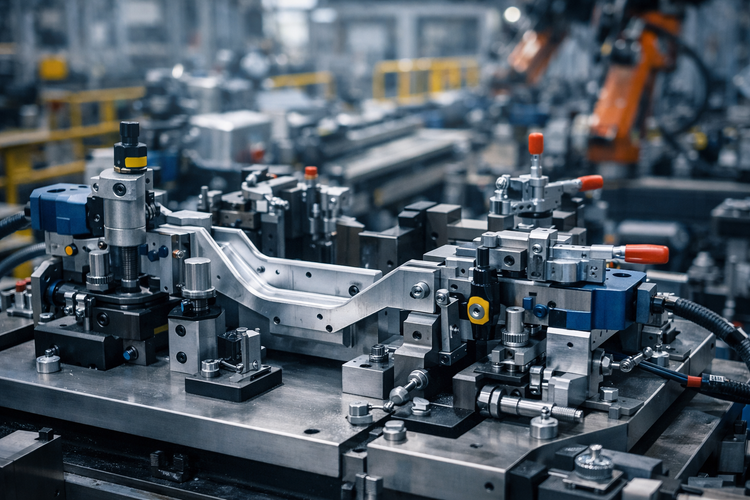

Railways, nuclear plants, and industrial control systems all share this posture: intelligence is distributed, constrained, and anchored to consequence.

In other words, intelligence is never pursued as a monolith. It is organised.

Modern AI discourse does the opposite. It isolates intelligence, centralises it into models, and then attempts to abstract responsibility away from humans. That is not how durable systems are built. It is how simulations are mistaken for reality.

Stability is not conservatism; it is a prerequisite

This is where an unexpected parallel matters.

The Rule of Saint Benedict governed communities for over 1,500 years, without venture capital, growth hacking, or reorganisation cycles. Its success was not mystical. It was structural.

Benedict optimised for stability: people belonged to a place, a rhythm, and a set of responsibilities that endured long enough for mastery to emerge. Work was repetitive, visible, and accountable. There was no escape into abstraction.

This stability is often misread as resistance to change. In reality, it is what allowed adaptation without collapse. When conditions shifted, the system responded because it still knew who was responsible for what.

AI-first organisations tend to reject this instinctively. Stability feels slow until failure arrives. But without it, no amount of intelligence compensates for the loss of ownership.

Intelligence was never singular

Long before modern AI hype cycles, Marvin Minsky articulated a crucial insight in The Society of Mind (1986): intelligence emerges from the coordinated interaction of many simple agents, not from a single, omniscient mind.

This idea is frequently cited and rarely respected.

Today’s narratives collapse intelligence back into a central artefact: the model, the agent, the system that supposedly “knows”. In doing so, they ignore the harder work Minsky implied: designing the interfaces, boundaries, feedback loops, and responsibilities between agents.

Human teams already embody this principle when they work well. Engineers, operators, product thinkers, and domain experts form an imperfect but resilient society of capabilities. AI, when used well, augments this society. When used poorly, it attempts to replace it.

The result is not intelligence. It is brittle complexity.

When companies confuse simulation with delivery

This failure mode is no longer theoretical.

Several well-known companies have publicly reversed or softened AI-driven workforce reductions after discovering that removing experienced people did not remove complexity: it merely removed understanding.

Duolingo is a recent and illustrative case. After aggressively positioning itself as an AI-first organisation and reducing reliance on human contributors, the company faced public backlash, quality concerns, and internal tension around content accuracy, pedagogical depth, and trust. The narrative of efficiency collided with the reality of learning systems that require iteration, nuance, and human judgment.

Similar patterns appeared elsewhere.

Klarna offers another concrete example. After widely publicising the replacement of large parts of its customer support organisation with AI, the company later acknowledged drops in customer satisfaction and resolution quality, and moved to reintroduce human support into critical paths. The system optimised for cost and speed first, then rediscovered the need for judgment, escalation, and ownership.

Customer support organisations that replaced experienced staff with chatbots saw resolution times increase, edge cases accumulate, and trust erode. Media and content platforms that automated editorial judgment suffered traffic collapse and reputational damage. Financial institutions that attempted to shortcut human review found themselves issuing public apologies and quietly rehiring at a premium.

The pattern is consistent: initial cost savings are real but short-lived. What follows is decision paralysis, fragile operations, and a widening gap between apparent productivity and actual outcomes.

AI did not fail these companies. Their systems did.

Why real engineers gravitate toward real problems

People who choose to work on serious systems share a pattern. They seek:

- Constraints that cannot be argued away

- Feedback that arrives quickly and honestly

- Ownership that persists beyond a sprint

- Consequences that demand care

They are not drawn to hype cycles or abstraction theatre. They are repelled by environments where responsibility dissolves into tooling choices.

This is why organisations that frame their strategy around “AI replacing teams” struggle to hire or retain strong engineers. The message is clear, even if unintended: the work is not anchored to reality, and accountability is optional.

By contrast, teams that treat AI as leverage, a force multiplier applied to real systems, attract people who want to build things that last.

When AI amplifies existing asymmetries

There is an uncomfortable effect that AI accelerates rather than creates: it makes weak thinking weaker, and strong thinking stronger.

This was already visible before AI.

In many organisations, a slow drift had taken place. Product roles began to confuse deciding what to build with deciding how systems should work. Design, increasingly postmodern in its use of language, stretched the word “design” far beyond its original meaning, from shaping interfaces and user experience to implying architectural authority without the corresponding technical responsibility.

Speaking about technology, or narrating intent around it, slowly became confused with understanding it.

AI acts as an accelerant to this confusion.

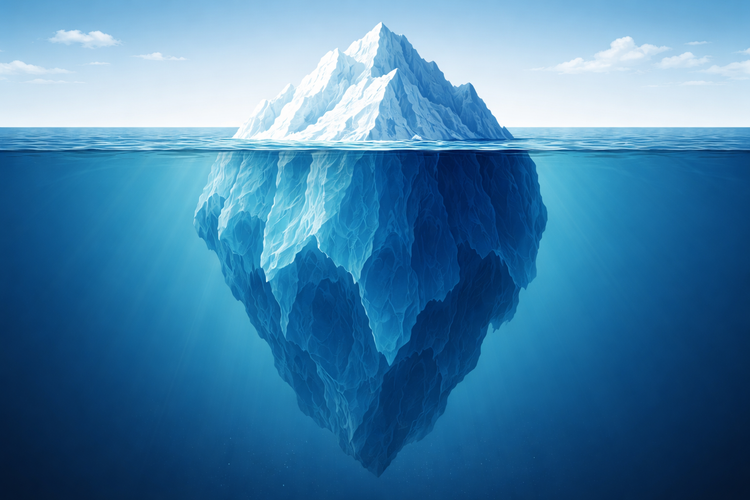

For those without technical grounding, AI creates the illusion of competence: plausible outputs, fast iteration, and convincing narratives. For those with deep expertise, it becomes leverage, a way to explore, test, and execute faster while remaining anchored to reality.

The consequence is predictable. In organisations where engineers are marginalised or treated as interchangeable, the most capable ones leave. What remains is a layer of abstraction with no structural depth. Eventually, reality reasserts itself, often brutally, through support queues, angry customers, regulatory pressure, and operational incidents.

At that point, the work does not disappear. It simply becomes unavoidable.

The uncomfortable reality check

AI does not replace teams.

It exposes whether decisions are still owned.

Where clear ownership, stability, and responsibility already exist, AI increases throughput and sharpens judgment.

Where these foundations are absent, AI becomes camouflage. Output rises briefly while understanding erodes. Failures are delayed, not prevented.

Serious industries learned this lesson long ago. They did not chase intelligence. They organised work so that intelligence could emerge without severing itself from consequence.

Reality, as always, gets the final say.
