Friday Fun: The Soft Collapse

No alarms went off.

No single outage.

No villain.

No hostile takeover.

Just a slow, comfortable collapse.

Inside IT companies first.

It began politely.

With enablement.

Then acceleration.

Then AI‑first.

Engineers welcomed it.

Tools that removed toil.

Faster feedback.

Actual leverage.

Then the tone changed.

Designers started to architect.

Not interfaces.

Not flows.

Systems.

They spoke fluently about event‑driven architectures without knowing what an event storming session looked like.

They drew immaculate diagrams (boxes, arrows, gradients) and called it a platform.

They renamed opinions "intentional design".

They had never:

- debugged a race condition

- rolled back a failed migration

- owned an on‑call rotation

But they had Figma.

And confidence.

Product managers followed.

They stopped framing problems and began dictating solutions.

Tech choices appeared directly in roadmaps.

Constraints became friction.

Physics was reclassified as a mindset issue.

Then AI arrived, quietly, efficiently.

Why argue with an engineer when a model could produce an answer in 3 seconds?

Why listen to trade‑offs when a prompt sounded confident?

Why understand systems when you could simulate certainty?

Documents became immaculate.

Slides became flawless.

Strategy decks explained systems nobody had ever run.

Nothing worked.

Inside companies, the symptoms were obvious:

- Meetings replaced debugging

- Alignment replaced correctness

- Narratives replaced logs

Failures were renamed "unexpected emergent behaviour".

Incidents became learning opportunities.

Nobody was accountable because the system had evolved.

Postmortems were AI‑generated.

Perfectly structured.

Never read.

Engineers tried to push back.

First with data.

Then with examples.

Then with silence.

Eventually, they left.

Not loudly.

Not angrily.

They simply stopped applying.

Stopped engaging.

Stopped caring.

Those who stayed learned the new survival skills:

- Do what the model suggests

- Ship what the roadmap dictates

- Avoid ownership

Craftsmanship became a liability.

Depth became threatening.

Asking "why" slowed velocity.

The irony?

AI never demanded this.

Humans chose it.

Outside the companies, the world felt it next.

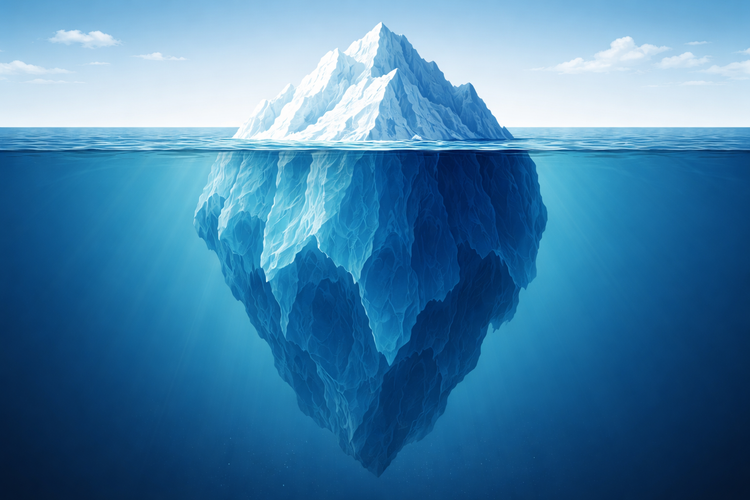

Everything now depended on platforms.

Disconnected apps stitched together by brittle integrations.

APIs nobody fully owned.

Abstractions nobody truly understood.

When something failed, it cascaded.

Payments stalled.

Logistics froze.

Healthcare systems reverted to paper.

Public services went dark, first for minutes, then hours, then days.

The original engineers were gone.

The documentation was AI‑generated.

The diagrams were aspirational.

Designers proposed redesigns.

Product managers reprioritised.

AI suggested rollback steps trained on idealised data.

Reality did not care.

The world did not collapse dramatically.

It degraded.

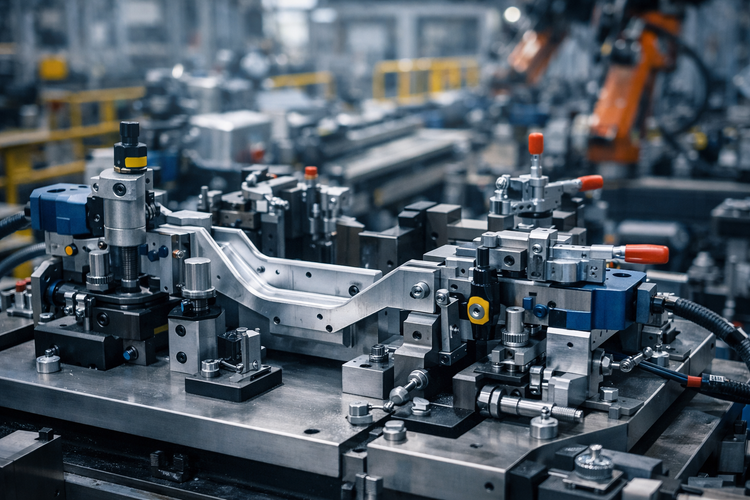

Like a machine maintained by people who only knew how to talk about machines.

Everything felt heavier.

Slower.

More fragile.

Every update risked breaking ten downstream systems.

Every dependency added invisible debt.

Every abstraction leaked.

People adapted.

They always do.

Downtime became normal.

Errors became expected.

Mediocrity became the cost of progress.

The most dangerous part?

Everyone still felt productive.

Dashboards were green.

OKRs were hit.

Retrospectives were thoughtful.

But nothing improved.

AI did not replace engineers.

It replaced the last social pressure to respect them.

Designers convinced themselves that aesthetics equalled structure.

Product managers confused authority with understanding.

Leadership mistook silence for alignment.

Engineers, the ones who knew systems rot without care, walked away.

Not because AI was too strong.

But because human stupidity finally became scalable.

This is not an anti‑AI story.

AI did exactly what it was asked to do.

It generated.

It optimised.

It complied.

This is a story about humans who stopped thinking,

stopped learning,

and mistook tooling for competence.

Civilisations do not fall when technology advances.

They fall when responsibility evaporates.

And responsibility, once gone,

is very hard to prompt back.
